LIMS Validation Approach: A Scientific Article

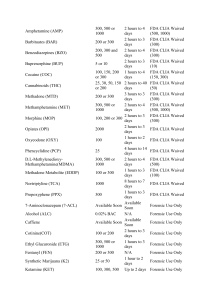

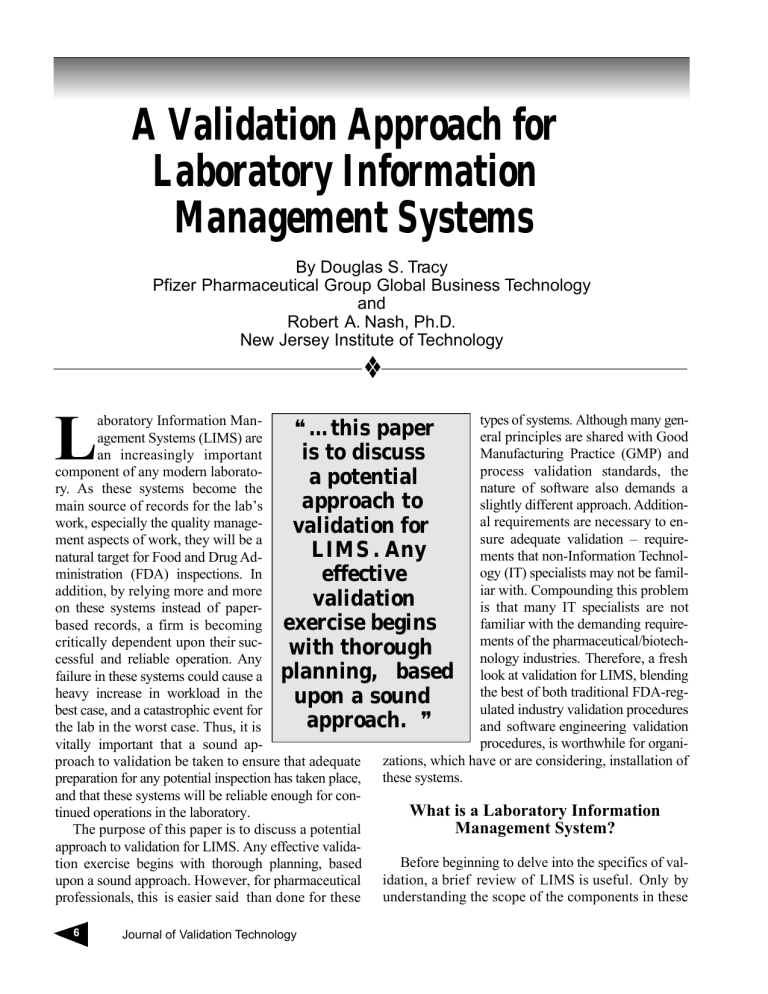

A Validation Approach for Laboratory Information Management Systems

By Douglas S. Tracy, Pfizer Pharmaceutical Group, Global Business Technology, and Robert A. Nash, Ph.D., New Jersey Institute of Technology

Journal of Validation Technology, November 2002, Volume 9, Number 1

Laboratory Information Management Systems (LIMS) are an increasingly important component of any modern laboratory. As these systems become the main source of records for the lab’s work, especially the quality management aspects of work, they will be a natural target for Food and Drug Administration (FDA) inspections. In addition, by relying more and more on these systems instead of paper-based records, a firm is becoming critically dependent upon their successful and reliable operation. Any failure in these systems could cause a heavy increase in workload in the best case, and a catastrophic event for the lab in the worst case. Thus, it is vitally important that a sound approach to validation be taken to ensure that adequate preparation for any potential inspection has taken place, and that these systems will be reliable enough for continued operations in the laboratory.

The purpose of this paper is to discuss a potential approach to validation for LIMS. Any effective validation exercise begins with thorough planning, based upon a sound approach. However, for pharmaceutical professionals, this is easier said than done for these types of systems. Although many general principles are shared with Good Manufacturing Practice (GMP) and process validation standards, the nature of software also demands a slightly different approach. Additional requirements are necessary to ensure adequate validation – requirements that non-Information Technology (IT) specialists may not be familiar with. Compounding this problem is that many IT specialists are not familiar with the demanding requirements of the pharmaceutical/biotechnology industries. Therefore, a fresh look at validation for LIMS, blending the best of both traditional FDA-regulated industry validation procedures and software engineering validation procedures, is worthwhile for organizations that have installed, or are considering installing, these systems.

What is a Laboratory Information Management System?

Before beginning to delve into the specifics of validation, a brief review of LIMS is useful. Only by understanding the scope of the components in these systems is it possible to create effective validation plans. In addition, since a LIMS itself is often a component of a much larger information system, validation needs may very well extend beyond the LIMS into other systems and processes. Thus, what a LIMS is, and how it may fit into the larger information systems landscape, is very important when determining the complete set of validation exercises required.

Purpose and Functionality

The basic purpose of a LIMS is to assist industry personnel with managing large volumes of information inside a typical laboratory. These systems first appeared in the early 1980s, and were first used to automate the collection and reporting of stability data.[1] Along with stability testing, LIMS are also used for process control and document management, providing a flexible and easily accessible platform upon which to develop and store process steps and documentation. As an adjunct to this function, LIMS are very useful platforms for the Quality Control (QC) and Quality Assurance (QA) functions, as they provide a simple way of sampling data and utilizing quality management tools for process monitoring and improvement. Finally, LIMS are also useful as an integrating mechanism, by being able to accept inputs directly from many types of laboratory equipment and coordinating supplies, schedules, etc.
with Materials Replenishment Planning (MRP) or Enterprise Resource Planning (ERP) systems used for corporate logistics.

Major Components of a LIMS

As an example of a specific LIMS, the features of the LabSys LIMS are shown in Figure 1 (listed by specific module).[2] In addition to the standard functionality of most LIMS, there are specialized modules or complete software packages to meet many other needs. For example, the Matrix Plus LIMS from Autoscribe contains a “Quotation Manager” that “allows laboratory and commercial personnel to track the progress of a contract for laboratory analysis work from initial inquiry to receipt of purchase order and the login of samples into the system.”[3] LIMS also come in a wide variety of shapes and sizes, from simple MS Access-based programs for smaller labs, to larger-scale, complex relational database client-server-based systems for larger laboratories. There is even one company, ThermoLabs, which is offering their LIMS via an Application Service Provider (ASP) model, where the software is hosted by ThermoLabs. The using company hooks up to the system over a secure Internet connection, and fees are collected by ThermoLabs on a monthly rental basis.[4]

Figure 1: LabSys LIMS Summary Functions

LIMS for Quality Control
• Set-up and Configuration: Allows the system to be configured to site-specific requirements.
• Sample Management: Sample lifecycle, including all the standard functionality normally associated with a standalone LIMS system. It supports many additional QA functions.
• Vendor Monitoring: Controls different sampling plans, and skip-lot testing parameters per vendor/product relationship. It tracks vendor performance and provides vendor performance reports.
• ERP Integration: Allows data to be interchanged between LabSys LIMS and ERP systems.
• Document Management Link: Allows links to documents and external document management systems for tests, samples, products, etc.

Process LIMS
• In-Process Sampling: Controls sample testing during the manufacturing stages of a batch process.
• Batch Tree Traceability: Full batch traceability with ERP interface.

Stability LIMS
• Stability Trial Template: Allows definitions of time-points, conditions, and testing to be performed at each stage.
• Trial Management: Used to manage all stability trials, and provide reporting on each.
• Stability Scheduler: Automatically schedules samples for testing when the time-point arrives.

Instrument Connect
• Simple Instruments: Collects and passes data from simple instruments, such as balances and meters, and can process this before reporting to LIMS.
• Complex Instruments: Collects and passes data from complex PC-controlled instruments, such as High Performance Liquid Chromatography (HPLC) and Gas Chromatography (GC). Can be configured to deliver a worklist from LIMS to the instrument, and subsequently upload the results from the instrument to LIMS.

Overall Validation Approach

The key to an overall validation approach is taking a system-level approach to the problem. In other words, we should not just validate the individual components of the process – software, hardware, user procedures – but treat these components as part of an overall system that needs system-level validation. Thus, we must remember not to get too lost in the details, but to focus on the overall outcome for validation, which is in essence a quality assurance process. As the FDA states in their Guidance on General Principles of Process Validation:

“The basic principles of quality assurance have as their goal the production of articles that are fit for their intended use. The principles may be stated as follows:
1. quality, safety, and effectiveness must be designed and built into the product;
2. quality cannot be inspected or tested into the finished product; and
3. each step of the manufacturing process must be controlled to maximize the probability that the finished product meets all quality and design specifications.”[5]

Basics of Validation – Other Key Definitions and Scope

We should also keep in mind a few key definitions, as these will be vitally important to outlining a specific plan for our LIMS validation. First of all, we need to review the basic definition of validation according to the FDA, both in the context of process and software. In the FDA Guidance on General Principles of Process Validation, the FDA defines process validation as:

“Process validation is establishing documented evidence which provides a high degree of assurance that a specific process will consistently produce a product meeting its pre-determined specifications and quality characteristics.”[5]

The FDA also defines software validation separately in their General Principles of Software Validation guidance document, although in much the same spirit:

FDA considers software validation to be “confirmation by examination and provision of objective evidence that software specifications conform to user needs and intended uses, and that the particular requirements implemented through software can be consistently fulfilled.”[6]

Another key definition in the General Principles of Software Validation document is that of software verification:

“Software verification provides objective evidence that the design outputs of a particular phase of the software development life cycle meet all of the specified requirements for that phase.”[6]

In addition, the definitions of Installation Qualification (IQ), Operational Qualification (OQ), and Performance Qualification (PQ) are well known to most pharmaceutical manufacturing personnel, but bear repeating here as specified by the FDA:

Qualification, installation – Establishing confidence that process equipment and ancillary systems are compliant with appropriate codes and approved design intentions, and that manufacturer’s recommendations are suitably considered.

Qualification, operational – Establishing confidence that process equipment and ancillary systems are capable of consistently operating within established limits and tolerances.

Qualification, process performance – Establishing confidence that the process is effective and reproducible.

Qualification, product performance – Establishing confidence through appropriate testing that the finished product produced by a specified process meets all release requirements for functionality and safety.[7]

Finally, we should keep in mind that validation could become an onerous task if not approached in a reasonable manner. One major consulting firm, Accenture LLP, estimates that pharmaceutical firms often spend twice as much time and cost to complete validated systems projects – often because of additional requirements, imposed by company Standard Operating Procedures (SOPs) and QA personnel, that are not mandated in regulations.[8] After all, the FDA in its General Principles of Software Validation refers to using the least burdensome approach to meeting the regulatory requirement.[6] Thus, while we should take a very serious and deliberate approach to validation, we should focus on assurance of a repeatable and high quality outcome, and not on trying to “boil the ocean” with unnecessarily extensive testing and/or overly detailed documentation.

Validation Master Plans

With these considerations in mind, the key document to produce before starting is a Validation Master Plan (VMP). In this document, we need to outline the major steps we are taking to validate this particular system. While we should make use of available company SOPs and templates, this document should be specific to the problem at hand.
It should take a risk-based approach to ensure that efforts are being focused on the most likely trouble spots, while limiting the overall validation effort to one of reasonable size and scope. Although this is similar to what the FDA defines as a “validation protocol,” this document is at a somewhat higher level. Detailed test results and “pass/fail” criteria are not specified; rather, the focus is on the guiding principles and scope of the validation effort, as well as a high-level overview of the tasks, costs, and resources required for validation. Other supporting documents are used to provide detailed information on test results for specific validation tasks. Nonetheless, this document is extremely important, for it will provide the baseline for all other validation tasks, and will likely be the first document that any inspector would like to review.

While this paper is not attempting to provide a specific outline for a VMP, it will provide the basis upon which to build such a plan. Arguably, the harder part of the plan document is determining an appropriate approach to validation. Once this is done, it is a relatively straightforward exercise to flesh out the details and blend in company SOPs, etc. Thus, the remainder of this paper will concentrate on developing the strategic approach in a generic fashion, with the understanding that this will need to be tailored to an individual company’s situation in order to be “implementable.”

System Validation

As stated before, the goal of the validation exercise is to have a complete validated system. It is useless to validate part of the system, or the hardware and software separately, and then to assume that they will work together in the end. The validation approach must be holistic, certifying the system as a complete unit – exactly as it is intended to be used operationally. In this regard, there are several major phases in a typical system validation.
One arises because we are almost always buying an off-the-shelf software product, e.g., the basic LIMS package. Thus, since we are not building this ourselves, this aspect of validation must focus on the vendor in an attempt to reasonably satisfy ourselves that they have a sound software development process in place. Another phase is the validation of those parts of the system that are either custom-built, or configured for our specific implementation of the system. In many cases, the functionality or integration capability of the package may be too generic for our specific purposes – thus we need to have either the system vendor or another systems integration firm do some custom software development work to build the complete system we need. In addition, in almost all situations, a LIMS requires some degree of configuration, ranging from designing in specific workflows, to creating templates for standard lab data sheets or QA reports. In either the custom-built or configured situation, validation is required for all of these activities. Finally, we need to ensure that what we have created will work properly in our environment – thus a final validation step in that environment is warranted. In summary, we could define our system validation goals as follows:

• Did we buy the right product?
• Did we add the right features to the product we bought?
• Will this customized/configured product function correctly in our production environment?

Vendor Validation

Although many vendors tout their products as “21 CFR Part 11 compliant” and “fully validated according to FDA standards,” the phrase “caveat emptor” or “buyer beware” should be kept in mind by the organization. Since these companies are software organizations, the FDA is not inspecting them, and their claims may or may not be true.
In addition, the final responsibility for validation rests with the pharmaceutical or biotech company, and not with the software product vendor. According to the FDA, in their Guidance for Industry: Computerized Systems Used In Clinical Trials:

“For software purchased off-the-shelf, most of the validation should have been done by the company that wrote the software. The sponsor or contract research organization should have documentation (either original validation documents or on-site vendor audit documents) of this design level validation by the vendor, and should have itself performed functional testing (e.g., by the use of test data sets) and researched known software limitations, problems, and defect corrections.”[9]

Thus, the organization has a requirement to conduct a reasonable amount of due diligence on the vendor for assurance of validation on their product. So what would a reasonable approach to validating the vendor entail? Basically, what is needed is a two-pronged approach. One is the validation of the product itself, by reviewing the vendor’s documentation on what specific validation tests they conducted. This should include a validation master plan, and a sampling of test results – including any known product limitations or defects. Since software products are extremely complicated, and typically consist of thousands, if not millions, of lines of code, some defects should be expected. In fact, one should be suspicious of any vendor that claims their product is “defect free.” Such a claim means either that they are not sharing all of the information with you or, worse yet, that they have conducted inadequate testing to uncover the bulk of the defects. The other aspect of vendor validation is to look at the vendor’s Software Development Life Cycle (SDLC) process.
For this aspect, there are several reasonable approaches, ranging from having the company’s internal IT department conduct a cursory review, to utilizing a report of an independent auditing group against an industry standard, such as International Organization for Standardization (ISO) 9000, TickIT® or Capability Maturity Model (CMM). Again, we are only trying to meet a reasonable standard here, so spending an abundance of time on the vendor’s SDLC, or reviewing their testing/validation procedures, is usually not warranted. If there are concerns, the best approach is usually to pick another vendor and potentially avoid a problem. If there are any lingering concerns, then schedule in more time to the testing phase of the validation plan to fully address these issues.

Approaches to Customized System Validation

Now that we have a vendor we are comfortable working with, we need to consider how we will validate the inevitable configuration and/or customization that accompany all LIMS implementations. Again, there is a range of approaches available, with some benefits and risks to each approach.

GAMP V-Model: Is this really a workable model?

The first approach is usually described as using the Good Automated Manufacturing Practice (GAMP) V-Model, as cited by a number of companies seeking to sell their validation consulting services.[10] This model is illustrated in Figure 2, and is one that is troubling, to say the least.

Figure 2: Good Automated Manufacturing Practice V-Model
• User Requirements Specifications, related to Performance Qualification (PQ)
• Functional Specifications, related to Operational Qualification (OQ)
• Detailed Design Specifications, related to Installation Qualification (IQ)
• System Build (base of the V)

While the pharmaceutical professional may feel comforted by the familiar IQ/OQ/PQ phraseology, the model does not fit together conceptually with a logical approach for software system validation.
For example, how does the IQ relate to the Detailed Design Specifications? The answer is that it really doesn’t in this context. Looking back to our definition of IQ, we see that this really refers to compliance with appropriate codes, standards, and design intentions. This is exactly what the user requirements document should be stating. For example, a good user requirements document states which applicable regulations (21 CFR Part 11, etc.) need to be considered for the solution. In addition, the user requirements in their final state should reflect the limitations of a particular software package. Although a package is normally selected after the first draft of user requirements, we must often adjust some of the requirements to reflect what is available and achievable with a particular package. If we list out requirements that cannot be achieved within our cost parameters, then we either need to adjust our requirements, or reflect that this will be a custom addition to the selected package. But, in any case, we need to have complete traceability between the user requirements and the final solution – thus the need for adjustment. For this model to be correct, we could possibly substitute Design Qualification (DQ) for IQ, and move the IQ across from the user requirements, but it doesn’t solve our problem completely, because we still don’t have an equivalent for OQ and PQ.

In addition, we need to think about the need for intermediate testing. In fact, the FDA states that “typically, testing alone cannot fully verify that software is complete and correct.
In addition to testing, other verification techniques and a structured and documented development process should be combined to ensure a comprehensive validation approach.”[6] As another reference, the Institute of Electrical and Electronics Engineers (IEEE), one of the key standards-setting bodies for software engineering, refers to Validation and Verification as complementary concepts.[11] Thus, this model is not only flawed with respect to terminology, it is fundamentally incomplete.

The Software Engineering V-Model: What about IQ/OQ/PQ?

With the limitations of the previous GAMP V-model in mind, we can take a look at a standard software engineering approach to the V-model. Here we see a much more comprehensive approach taken to verification as a prerequisite to validation. As depicted in Figure 3, we see that for each stage of refinement from the user requirements, there is a verification process.[12] What happens in this verification process is that a review of how well the output of a particular stage maps back to the previous stage takes place. This can take the form of a formal architecture/design/code review, or a series of more informal “structured walkthroughs.” In either case, the outcome is the same, and we are looking for where there were potential gaps or poor handoffs between the stages. This is critically important for two reasons. The first is that these tasks are often done by different people, and/or sub-teams with specific skill sets, so some miscommunication or misunderstanding is likely. Secondly, as we understand more and more about the solution, it is likely that additional clarifying questions will be asked about the overall solution. Some of these questions may prompt a modification to the output of the previous stage. Thus, to keep everything in synch, we need to perform the verification step.

The other difference from the GAMP model is that we now have traceability, along similar levels of detail for the purposes of designing tests.
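The stage-to-stage verification described above, in which each stage's output is reviewed against the previous stage to find gaps or poor handoffs, can be pictured as a simple traceability check. The following is only a minimal sketch under stated assumptions: the `StageItem` class, the `verification_gaps` function, and identifiers such as `UR-1` and `HLD-1` are hypothetical illustrations and do not come from the article or from any LIMS product. A real review would also examine the content of each item, not just its links.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class StageItem:
    """One output of a development stage, e.g. a requirement or a design element."""
    item_id: str
    # IDs of the previous-stage items this item claims to implement (hypothetical scheme).
    traces_to: frozenset = field(default_factory=frozenset)


def verification_gaps(previous_stage, current_stage):
    """Return (uncovered, orphans) for one hand-off between two stages.

    uncovered: previous-stage IDs that nothing in the current stage traces to.
    orphans:   current-stage IDs that trace to no known previous-stage ID.
    """
    previous_ids = {item.item_id for item in previous_stage}
    covered = set()
    orphans = []
    for item in current_stage:
        valid_parents = item.traces_to & previous_ids
        if not valid_parents:
            orphans.append(item.item_id)
        covered |= valid_parents
    uncovered = sorted(previous_ids - covered)
    return uncovered, sorted(orphans)


# Hypothetical example data, invented for illustration only.
user_requirements = [StageItem("UR-1"), StageItem("UR-2"), StageItem("UR-3")]
high_level_design = [
    StageItem("HLD-1", frozenset({"UR-1"})),
    StageItem("HLD-2", frozenset({"UR-1", "UR-2"})),
    StageItem("HLD-3", frozenset({"UR-9"})),  # traces to a requirement that does not exist
]

uncovered, orphans = verification_gaps(user_requirements, high_level_design)
print("Requirements with no design element:", uncovered)
print("Design elements with no valid requirement:", orphans)
```

The same check could be repeated at each hand-off (requirements to high-level design, high-level design to detailed design, and so on), which is one way to keep the stages "in synch" as the earlier stages are revised.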
Specific code modules are tested by unit testing (both a verification and validation step), the detailed design is tested by integration testing among code modules, and the overall system design and user requirements are tested by both system testing and user acceptance testing. The difference between the last two is that system testing is conducted by software developers and is typically more comprehensive, while user acceptance testing is performed by end users and is typically somewhat more cursory.

Figure 3: Software Engineering V-Model
• User Requirements Specifications, related to User Acceptance Testing
• High-Level Design and Architecture, related to System Testing
• Detailed Design Specifications, related to Integration Testing
• Unit Coding, related to Unit Testing
• A Verification step links each specification stage to the one before it

The danger with this approach, or perhaps the question it raises, is just how far to go with testing. The best way to gauge this is to take a risk analysis approach. Thus, by looking at the risk inherent in failures of various parts of the system, we can test to a reasonable level, and avoid spending too much time on testing the detail.[13] However, even though this model is much more comprehensive than the GAMP model, it still lacks some final testing and validation. Remember, the overall goal is to have a system that supports a particular process in a verifiable and reproducible manner. In this model, although the system is tested and accepted by the user, there is no specific equivalent to the OQ and PQ concepts as discussed before. Thus, we need another level of validation to complete our validation approach.

A Combined V-Model for Pharmaceutical Systems

While the two previous models both had their shortcomings, if we take both of them together, all of the requirements of a comprehensive validation approach are covered.
Both the detailed validation of software development activities (configuration and/or customization), and the overall process aspects are tested and confirmed. Thus, we have a model (as depicted in Figure 4) that combines the GAMP V-model and the software engineering V-model into one logical flow. The starting point is the user requirements, as with the Software Engineering (SE) approach, and the flow continues along the SE approach until the User Acceptance Testing (UAT) as before. However, there is one important point of exception. That is, the UAT and the IQ can be done at the same time, since they are fulfilling similar goals, but for slightly different audiences. The UAT is the opportunity for the end users to confirm that the system fulfills the system expectations, including compliance with appropriate regulations. The IQ is the opportunity for the technical support staff to confirm that the system (both hardware and software) can be installed in an operational environment in a manner that is both repeatable and verifiable via testing. A successful conclusion to both the UAT and IQ is a system that is functionally and operationally qualified to place in the operations environment. From this point on, a more traditional OQ and PQ approach applies, as the system should be “stress tested” and confirmed for acceptable operations and continuing performance in the actual operating environment.

Figure 4: Extended V-Model for Pharmaceutical Systems
• User Requirements Specifications, related to User Acceptance Testing/IQ
• High-Level Design and Architecture, related to System Testing
• Detailed Design Specifications, related to Integration Testing
• Unit Coding, related to Unit Testing
• A Verification step links each specification stage to the one before it
• Operational Qualification and Performance Qualification follow User Acceptance Testing/IQ
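The flow of the combined model in Figure 4 can be sketched as a small data structure: each specification stage is paired with the test level that confirms it, testing climbs the right side of the V from unit testing up to UAT/IQ, and OQ and PQ follow. This is a minimal sketch, not a validation tool. The names `V_MODEL_PAIRS`, `POST_V_PHASES`, `phase_order`, and `next_phase` are hypothetical, and the strict rule that a phase may start only after every earlier phase has passed is an assumption made for illustration, since the article notes that UAT/IQ and OQ/PQ may sometimes be combined.

```python
# Specification stages (left side of the V, top to bottom) paired with the
# test level that confirms each one (right side of the V), following Figure 4.
V_MODEL_PAIRS = [
    ("User Requirements Specifications", "User Acceptance Testing / IQ"),
    ("High-Level Design and Architecture", "System Testing"),
    ("Detailed Design Specifications", "Integration Testing"),
    ("Unit Coding", "Unit Testing"),
]

# Qualification phases that follow the V in the actual operating environment.
POST_V_PHASES = ["Operational Qualification", "Performance Qualification"]


def phase_order():
    """Testing climbs the right side of the V bottom-up, then runs OQ and PQ."""
    bottom_up = [test for _spec, test in reversed(V_MODEL_PAIRS)]
    return bottom_up + POST_V_PHASES


def next_phase(results):
    """Return the first phase that has not passed, or None if all have passed.

    `results` maps phase name -> True (passed) or False (failed); a missing
    entry means the phase has not been run. Strict sequential gating is an
    assumption of this sketch, not a rule stated in the article.
    """
    for phase in phase_order():
        if not results.get(phase, False):
            return phase
    return None


# Hypothetical project status, invented for illustration.
results = {"Unit Testing": True, "Integration Testing": True, "System Testing": True}
print(phase_order())
print("Next phase to run:", next_phase(results))
```

Keeping UAT/IQ and OQ/PQ as separate entries in the ordering reflects the default of treating them as distinct phases; a project that runs UAT/IQ in the actual operations environment could merge those entries.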
In addition, although we have broken out the UAT/IQ from the OQ/PQ, this may be easily combined into one comprehensive set of testing if the UAT/IQ takes place in the actual operations environment. This is usually possible if the system is going into a “greenfield” environment where it is not replacing another system. However, if the system is replacing another, most likely the UAT/IQ will take place in a pilot environment – either to reduce risk or keep the old system running until there is sufficient confidence in the new system to shut down the old system. In either case, we must be vigilant about ensuring the adequacy of final testing, as there is a tendency to rush through after the UAT phase. Although everyone is anxious to get the new system into production, many UATs are not comprehensive enough to fulfill both functional and operational testing needs. Therefore, we should be very cautious about arbitrarily combining the UAT and the OQ/PQ into one testing phase. When in doubt, keep the phases distinct to avoid confusion and unnecessary risk in the process.

Preparing for Inspections and Ongoing Validation

While having the basic validation process well in hand is a must, there are a couple of other issues concerning validation that are almost as crucial. One is the documentation of the approach, plan, and results of validation in a form that is not only logical, but can easily be presented to any potential inspector – internal or external. The other is the need for ongoing validation of changes to the software or process. Simply validating the process one time is never enough. There will always be improvements to the process, bug fixes to the software, new releases of the software, etc. that will require ongoing validation for the overall process to remain “qualified.” Thus, a few additional words on these topics are in order.
Presentation of Validation Information

One of the goals of validation is to be able to demonstrate quickly to an outsider (management, internal auditors, FDA, etc.) that the process/system is indeed validated. Thus, early on in the process, part of the validation approach should be a consideration of what the required documents are, and how they should be organized and stored. This will allow a top-down approach to documentation, and make it much easier to track through the validation process. In addition, there may be some overall validation efficiencies gained from following such an approach. As noted by the consulting firm, Accenture, documentation is one of the key reasons for additional cost with a validated system.[8] By focusing early on the documentation strategy, including defining the hierarchy of documents and constructing templates to guide the work, we can avoid some of the cost of excessive documentation, while ensuring that we have adequate documentation. Finally, we should make sure to implement a good version control process on our documentation. Most pharmaceutical firms have some type of document management system/process already in hand for this purpose, and it should clearly be used to store versioned copies of the system validation documentation.

Change Management – The Need for Ongoing Validation

Once we have a validated system in place, the work is not over for our validation approach. We must ensure that there is an effective change management process in place both to determine the potential benefits/costs/effects of changes, and to ensure that we test appropriately. From a validation perspective, there are really three things to keep in mind. First of all, we must test the actual changed code itself, which is pretty obvious and straightforward.
In addition, we must conduct what is referred to as “regression testing” to test system functionality that was not directly changed, but may have been inadvertently changed as a result of another change. In this area, obviously a great deal of judgment must be employed to avoid both over-testing, by redoing all of the tests, and under-testing, by making too many assumptions about what the change will or will not affect. One approach to this is to conduct both testing of areas that may be likely to experience a side effect of the change, and a limited sample of the other tests to confirm that the assumptions were appropriate. A good baseline when developing a change management plan is IEEE Std 1042-1987, IEEE Guide to Software Configuration Management.[14]

Conclusion

Successful validation of a LIMS is a challenging task, but one that can be met if a sound approach to the problem is used. Traditional process manufacturing validation techniques are not enough, and software engineering techniques don’t address all of the concerns of pharmaceutical manufacturers. However, by bringing together the best of the two into a blended approach, a successful strategy can be formulated. When this approach is combined with a sound documentation plan and ongoing change management, the fundamentals will be in place to not only have a soundly verified LIMS, but one that is able to pass the most demanding FDA inspection as well.

About the Author

Douglas S. Tracy is the Director, Global Business Technology for the Pfizer Pharmaceutical Group (PPG). His current responsibilities focus on systems and information processes within the safety and regulatory affairs area of PPG. He has over 20 years of operational and information technology management in both the public and private sectors. Doug holds a BSEE with honors from the U.S. Naval Academy, an MBA with honors from Duke University, and an MS in Software Development and Management from the Rochester Institute of Technology. He can be reached by phone at 212-733-5947, or e-mail at Douglas.tracy@pfizer.com.

Robert A. Nash, Ph.D., is Associate Professor of Industrial Pharmacy at the New Jersey Institute of Technology. He has over 24 years in the pharmaceutical industry with Merck, Lederle Laboratories, and The Purdue Frederick Company. Dr. Nash is co-editor of Pharmaceutical Process Validation, published by Marcel Dekker and Co. He can be reached by phone at 201-818-0711, or by fax at 201-236-1504.

References

1. Brush, M. “LIMS Unlimited.” The Scientist. 2001, Vol. 15, No. 11. Pp. 22-29.
2. LabSys Ltd. System Appreciation Guide 2.1: LabSys LIMS. http://www.labsys.ie. March, 2002.
3. Autoscribe, Ltd. Plus Points newsletter #2. http://www.autoscribe.co.uk.
4. ThermoLabSystems, Inc. Pathfinder Global Services Group Overview. http://www.thermolabsystems.com/services/pathfinder/nautilus-asp. May, 2002.
5. FDA. Guideline on General Principles of Process Validation. Division of Manufacturing and Product Quality (HFD-320), Office of Compliance, Center for Drug Evaluation and Research. May, 1987.
6. FDA. General Principles of Software Validation; Final Guidance for Industry and FDA Staff. Center for Devices and Radiological Health. January 11, 2002.
7. FDA. Glossary of Computerized System And Software Development Terminology. FDA Office of Regulatory Affairs Inspector’s Reference. 1994.
8. Accenture LLP. Computer Systems Validation: Status, Trends, and Potential. Accenture LLP. White Papers. 2001.
9. FDA. Guidance for Industry: Computerized Systems Used In Clinical Trials. FDA Office of Regulatory Affairs Inspector. April, 1999.
10. Invensys, Inc. Overview of Validation Consulting Services. http://www.invensys.com. May, 2002.
11. Institute of Electrical and Electronics Engineers. IEEE Standard for Software Verification and Validation, IEEE Std 1012-1998. IEEE Software Engineering Standards. Vol. 2. New York: 1998.
12. Forsberg, K., Mooz, H., and Cotterman, H. Visualizing Project Management – 2nd Edition. John Wiley & Sons, Inc. New York. 2000.
13. Walsh, B. and Johnson, G. “Validation: Never an Endpoint: A Systems Development Life Cycle Approach to Good Clinical Practice.” Drug Information Journal. 2001, Vol. 35. Pp. 809-817.
14. Institute of Electrical and Electronics Engineers. IEEE Guide to Software Configuration Management, IEEE Std 1042-1987. IEEE Software Engineering Standards, Vol. 2. New York: 1998.

Article Acronym Listing

ASP: Application Service Provider
CMM: Capability Maturity Model
DQ: Design Qualification
ERP: Enterprise Resource Planning
FDA: Food and Drug Administration
GAMP: Good Automated Manufacturing Practice
GMP: Good Manufacturing Practice
IEEE: Institute of Electrical and Electronics Engineers
ISO: International Organization for Standardization
IQ: Installation Qualification
IT: Information Technology
LIMS: Laboratory Information Management Systems
MRP: Materials Replenishment Planning
OQ: Operational Qualification
PQ: Performance Qualification
QA: Quality Assurance
QC: Quality Control
SDLC: Software Development Life Cycle
SE: Software Engineering
SOP: Standard Operating Procedure
UAT: User Acceptance Testing
VMP: Validation Master Plan